Fish Fight Netcode Explained (part 1)

For our netcode in Fish Fight, we use a variant of deterministic lockstep called Delayed Lockstep. It consists of a peer-to-peer architecture running a deterministic game, which requires very little bandwidth thanks to only sending input between the peers.

This video was the main inspiration for our netcode architecture:

What is described from 6:09 to 7:15 is the current state of our netcode. The video proceeds to explain the limitations of this approach and how they iterated from there to what can more accurately be called Deterministic Rollback (of which a common implementation is GGPO). That is where we are headed eventually, but we are beginning with a minimal foundation which we have a full grasp and agency over. From the intentionally naive “Player Unhappy” model we will gradually work our way towards “Player Very Happy”.

But before we go any further, let’s explain some terms and concepts.

First off, what is it that makes networking games so difficult? At its core, there are two main issues: the latency (travel time) of information over the internet and, when working around that, ensuring that nobody can cheat while everyone’s input is integrated fairly. In other words, the game must feel responsive even though it takes time for your actions to travel to the server and other players. But many of the naïve solutions to this let players control the game and cheat however they wish, which makes the problem a whole lot more difficult. Regardless, what’s important is often not that the solution is “correct”, but that it feels great to your players. Remember that game networking consists largely of clever tricks and smoke and mirrors, all to disguise the inherent limits of spacetime.

Server-Client vs. Peer-to-Peer

A peer/client is someone who joins an online game session. A peer is usually a node in a peer-to-peer network (explained below), while a client is someone who connects to a server. Most games these days use a server-client architecture, where clients join a server that handles everything in the online game session and streams the necessary data to the clients.

However, this isn’t the only way of handling things. Indeed, before the server-client architecture became commonplace, there was the peer-to-peer (P2P) architecture. Instead of relying on an authoritative server to tell the clients what’s happening, the peers tell EACH OTHER what is happening. This works well on a small scale, but because every peer has to send its data to every single other peer, the bandwidth each peer needs grows linearly as more players join, and the total number of connections grows quadratically. For this reason most games just use the server-client system for anything more than 6-8 players. However, for a game like Fish Fight, which will only have 2-4 players in any given match, a peer-to-peer system works just fine.
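To put some numbers on that scaling, here is a small Python sketch. The function names, the 40-byte packet size and the 60 Hz send rate are made up for illustration and are not taken from Fish Fight’s code.

```python
def mesh_links(players: int) -> int:
    """Number of direct connections in a full peer-to-peer mesh."""
    return players * (players - 1) // 2


def upload_per_peer(players: int, packet_bytes: int, sends_per_second: int) -> int:
    """Bytes per second one peer uploads when it sends each packet to every other peer."""
    return (players - 1) * packet_bytes * sends_per_second


# Hypothetical numbers: 40-byte packets sent 60 times per second.
for n in (2, 4, 8):
    print(n, "players:", mesh_links(n), "links,", upload_per_peer(n, 40, 60), "bytes/s per peer")
```

Going from 4 to 8 players more than doubles each peer’s upload and more than quadruples the number of connections, which is why P2P is usually kept to small matches.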

Using a P2P model has several benefits. First and foremost, there’s no need to pay for hosting. For a small project like Fish Fight, with a relatively simple simulation, that cost would likely be low. But hosting still brings a plethora of problems that must be addressed: while small, the cost isn’t zero and has to be paid somehow, and if the game gets immensely popular the price will quickly skyrocket. Then you must also write a load distribution system, get DDoS protection, and the list goes on. A peer-to-peer architecture offloads all the costs to the players, who are in any case already running the simulation. This also means that you don’t need to maintain separate client and server codebases that must line up exactly in behaviour. Peer-to-peer might even offer lower latency, since the packets don’t have to go back and forth through a server.

As such, making a peer-to-peer game makes sense at Fish Fight’s scale. However, anyone who wants to make an online multiplayer game MUST understand that every multiplayer solution is specialised to its use case. The server-client architecture has many other benefits, mainly scalability, but also support for persistent state and for complex systems that must always be online, neither of which Fish Fight has. The “servers” in P2P go offline as soon as a player quits, and therefore don’t support any always-online mechanics. Hosting your servers externally also means your players don’t need to worry about port forwarding, NAT punching or the like to get past home firewalls.

Now, for some vocabulary:

The Authority is whoever decides what is true, and can overwrite anyone else’s state. The authority isn’t necessarily one party; rather, authority is held over individual objects or values. For example, a player might hold authority over a ball they are kicking, so they don’t have to ask the server for confirmation before each interaction, resulting in a snappier experience. This isn’t exactly applicable in a lockstep P2P architecture, but it is foundational for client-server architectures, so you’ll likely see the term if you ever read anything about networking. In those cases the server is almost always the authority, so the players can’t cheat.

Listen Servers are a network architecture/topology not to be confused with peer-to-peer. A listen server is simply a client-server architecture where one of the clients hosts the server. This combines the cost benefits of a P2P topology with better lag compensation methods. It still requires the same bandwidth as P2P, but only for the client who is running the server, so the game is no longer capped by the slowest connection.

Ping/Latency/Round Trip Time all relate to the time it takes for a message to travel over a network. Latency is the time it takes to travel from the sender to the recipient, while Round Trip Time (RTT) and Ping refer to the time it takes to go there and back. RTT, however, is not necessarily twice the latency, although it is often very close. On the network, different conditions and even routes mean that times will vary.

Jitter is related to latency, and is the amount by which it varies. A bad connection not only has long latency, but the time it takes for packets to arrive also varies greatly. This is a major hindrance when you want data to arrive in a smooth and regular manner, such as frames in a video, sound in a voice chat or input for a player. This is managed by adding a Buffer, which stores values for a short while and then dispenses them in an even flow. The tradeoff is that the fastest packets are slowed down to be in time with the slowest packets, leading to an overall slowdown.
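As a rough illustration of such a buffer, here is a minimal Python sketch. The `JitterBuffer` class, its method names and the tick-based timing are all assumptions made for this example; a real implementation would also have to decide what to do when a packet never shows up.

```python
import heapq


class JitterBuffer:
    """Holds packets briefly and releases them in order at a steady pace."""

    def __init__(self, delay_ticks: int):
        self.delay_ticks = delay_ticks  # how long every packet is held back
        self._heap = []                 # (sequence number, payload), smallest first

    def push(self, seq: int, payload: str) -> None:
        heapq.heappush(self._heap, (seq, payload))

    def pop_due(self, now_tick: int):
        """Return the payload scheduled for this tick, or None if it is not here yet."""
        due_seq = now_tick - self.delay_ticks
        # Throw away anything that arrived too late to be played.
        while self._heap and self._heap[0][0] < due_seq:
            heapq.heappop(self._heap)
        if self._heap and self._heap[0][0] == due_seq:
            return heapq.heappop(self._heap)[1]
        return None


# Packets 0, 1, 2 were sent on ticks 0, 1, 2 but arrive unevenly on ticks 1, 3, 3.
arrivals = {1: [(0, "a")], 3: [(1, "b"), (2, "c")]}
buf = JitterBuffer(delay_ticks=2)
played = []
for tick in range(6):
    for seq, payload in arrivals.get(tick, []):
        buf.push(seq, payload)
    played.append(buf.pop_due(tick))
print(played)  # [None, None, 'a', 'b', 'c', None]
```

The packets arrive in a burst but come out one per tick. The price is that packet 0, which arrived after one tick, is still held until tick 2.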

Then there’s Packet Loss, where a packet gets completely lost at some crossroad of the internet. On a bad connection, not all packets will arrive. This is countered by adding Redundancy. Common ways to compensate are to send packets multiple times, so at least one is likely to arrive, or to send a confirmation once a packet is received. If the confirmation is not received, the sender resends the packet until it gets a response.
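Both ideas can be combined: keep resending every input until the other side confirms it. The Python sketch below shows one way to do that. The `RedundantSender` class and the cumulative acknowledgement scheme are assumptions made for illustration and not a description of Fish Fight’s redundancy layer.

```python
class RedundantSender:
    """Resends every input the remote side has not yet acknowledged."""

    def __init__(self):
        self.unacked = {}  # frame number -> input value

    def queue(self, frame: int, player_input: int) -> None:
        self.unacked[frame] = player_input

    def build_packet(self) -> list:
        """One packet carries all unacknowledged inputs, oldest first."""
        return sorted(self.unacked.items())

    def on_ack(self, acked_frame: int) -> None:
        """The receiver confirmed everything up to and including acked_frame."""
        for frame in [f for f in self.unacked if f <= acked_frame]:
            del self.unacked[frame]


sender = RedundantSender()
received = {}
lost_packets = {1, 2}  # pretend the packets sent on frames 1 and 2 never arrive
for frame in range(4):
    sender.queue(frame, frame * 10)
    packet = sender.build_packet()
    if frame not in lost_packets:
        received.update(packet)
print(received)        # {0: 0, 1: 10, 2: 20, 3: 30}

sender.on_ack(3)       # the confirmation finally arrives
print(sender.unacked)  # {}
```

Even though two packets in a row were lost, the packet sent on frame 3 still carried the inputs for frames 1 and 2, so nothing is missing on the receiving side.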

For some slightly more low-level things that you can do without:

The Internet is a massive network of connected computers, utilising the IP Stack to communicate between them. This part isn’t exactly necessary to read, but it removes a lot of the magic and mystery of how things actually travel around. The IP Stack refers to a number of layers, each of which supports the ones above it in performing their function.

At the bottom is the physical layer, which consists of the physical cables and antennas between hosts. Next is the link layer (e.g. Ethernet), a basic protocol that delivers messages between nodes on the same local network using MAC addresses, along with some integrity checks and whatnot. On a shared medium, the link layer sends each packet to all other hosts, who then determine whether it’s intended for them. So then there’s the network layer (IPv4/6), which implements flexible IP addresses and a way to translate them to and from MAC addresses. Using this, it can also route packets to hosts other than the ones directly connected to it. The Internet is a mesh network, so to send a message between two computers you will usually have to make many jumps between routers and gateways along the way. IPv4/6 handles this, among other things.

By now you can send messages between any connected computers, so you can add the transport layer (a couple of protocols are described below), which implements ports, allowing individual processes to communicate over the net, and provides some other functionality like redundancy. Finally, on top of that, is the application layer, where your program can actually send information! Each of these layers adds a bit more overhead and processing to the packets being sent, which slows down communication but is essential for it to happen at all.

TCP/UDP are transport protocols on top of IP, and their comparison usually relates to packet loss and redundancy. UDP is an unreliable protocol that “simply” sends messages, fire-and-forget, with no regard to whether they arrive. TCP on the other hand is reliable and guarantees your messages will (eventually) arrive, but has greater overhead. Fish Fight uses UDP for speed, and implements a custom redundancy layer on top of that for extra performance. TCP is often overkill, and a custom-built solution often works better since it can exploit the exact workings of your game. Overhead is the extra data that is sent with every packet, and adds to the required bandwidth. By sending more data per packet, the overhead will make up a smaller part of the data sent.
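To see how much the overhead matters for tiny packets, here is a quick Python calculation. It assumes the minimum IPv4 header (20 bytes) and the UDP header (8 bytes) and ignores link-layer framing; the payload sizes are arbitrary examples.

```python
IPV4_HEADER = 20  # bytes, minimum IPv4 header without options
UDP_HEADER = 8    # bytes, fixed UDP header size


def overhead_fraction(payload_bytes: int) -> float:
    """Share of an IPv4/UDP packet that is header rather than payload."""
    headers = IPV4_HEADER + UDP_HEADER
    return headers / (headers + payload_bytes)


for payload in (1, 28, 252):
    print(payload, "byte payload:", round(overhead_fraction(payload), 3))
```

A single byte of input travels in a packet that is almost entirely header, which is one reason bundling several frames of input into one packet costs very little extra.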

Besides reliable and unreliable, protocols can also be sequenced or unordered. UDP has no guarantee that the packets are received in the order sent, while TCP is Sequenced and will wait for past packets to arrive if future packets are received before them.
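On top of UDP you have to handle ordering yourself, typically by stamping each packet with a sequence number. The Python sketch below shows the simplest policy, which is to drop anything older than what has already been seen. Note that this is different from what TCP does (TCP waits and reorders); the `SequencedReceiver` name is hypothetical, and sequence number wrap-around is ignored.

```python
class SequencedReceiver:
    """Accepts a packet only if it is newer than everything seen so far."""

    def __init__(self):
        self.latest_seq = -1

    def accept(self, seq: int) -> bool:
        if seq <= self.latest_seq:
            return False  # duplicate or out-of-date packet: drop it
        self.latest_seq = seq
        return True


receiver = SequencedReceiver()
arrival_order = [0, 2, 1, 3, 3]  # packet 1 arrives late, packet 3 arrives twice
accepted = [seq for seq in arrival_order if receiver.accept(seq)]
print(accepted)  # [0, 2, 3]
```

Dropping stale packets suits data where only the newest value matters. For lockstep input, where every frame is needed, the frame number is instead used to file each input into the right slot.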

But before writing a custom solution, it’s worth considering its development cost; TCP often works excellently as long as not every millisecond or bit counts.

Delayed Lockstep

Anyways, we explained the peer-to-peer part, but you’re probably aching to know what Delayed Lockstep is! Gaffer on Games already wrote about this in an article you can read here, but in summary:

At its most basic, lockstep works by collecting input from every player and then simulating one frame once all input is gathered. In other words, the only thing that gets transmitted between players is their input. Input can often be packed into a single byte, and therefore very little bandwidth is required. Once input from everyone has arrived, the simulation is advanced one step. Since everyone waits for input from each other, everyone steps in sync.
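Here is a minimal Python sketch of both ideas: packing input into one byte and only stepping once everyone has reported. The button layout, the `Lockstep` class and the player names are invented for this example and do not mirror Fish Fight’s actual code.

```python
from enum import IntFlag


class Buttons(IntFlag):
    """Each button is one bit, so a whole frame of input fits in one byte."""
    LEFT = 1
    RIGHT = 2
    JUMP = 4
    FIRE = 8


def pack(buttons: Buttons) -> bytes:
    return bytes([int(buttons)])


def unpack(data: bytes) -> Buttons:
    return Buttons(data[0])


class Lockstep:
    """Advances the simulation only when input from every player is present."""

    def __init__(self, player_ids):
        self.player_ids = set(player_ids)
        self.pending = {}  # player id -> input for the frame being collected
        self.frame = 0

    def receive(self, player_id, data: bytes) -> None:
        self.pending[player_id] = unpack(data)

    def try_step(self, simulate) -> bool:
        if set(self.pending) != self.player_ids:
            return False  # still waiting for someone
        simulate(self.frame, dict(self.pending))
        self.pending.clear()
        self.frame += 1
        return True


game = Lockstep(["alice", "bob"])
log = []
game.receive("alice", pack(Buttons.LEFT | Buttons.JUMP))
first = game.try_step(lambda frame, inputs: log.append((frame, inputs)))
game.receive("bob", pack(Buttons.FIRE))
second = game.try_step(lambda frame, inputs: log.append((frame, inputs)))
print(first, second, game.frame)  # False True 1
```

The first call to `try_step` does nothing because bob’s input is missing, which is exactly the waiting that keeps everyone in sync.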

But however small the packets are, the latency will remain largely the same. Waiting for every player to send their input each time would mean that the game cannot update faster than (in the best case) 1/(RTT/2) Hz, since each input needs one one-way trip to arrive (confirmation can be sent later). If you want your game loop to run at 60 Hz, you can’t have an RTT over roughly 33 ms, which is difficult to achieve outside of wired and geographically small networks.
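The arithmetic behind that limit, as a small Python sketch. It assumes the one-way latency is exactly half the RTT, which as noted earlier is only approximately true; the function names are our own.

```python
def max_tick_rate_hz(rtt_ms: float) -> float:
    """Best-case update rate for plain lockstep: one step per one-way trip."""
    one_way_ms = rtt_ms / 2
    return 1000 / one_way_ms


def max_rtt_ms(tick_rate_hz: float) -> float:
    """Largest RTT that still allows the given update rate."""
    frame_time_ms = 1000 / tick_rate_hz
    return 2 * frame_time_ms


print(max_tick_rate_hz(100))       # 20.0
print(round(max_rtt_ms(60), 1))    # 33.3
```

With a fairly ordinary 100 ms RTT, plain lockstep tops out at 20 updates per second, and a 60 Hz loop leaves only about 16.7 ms for each one-way trip.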

Enter: Delayed Lockstep. The “delay” part is an input buffer that stores inputs for a short while before executing them. Now every input packet also contains a frame timestamp so that all remote input can be matched up with the local input and executed at the same frame. As input rolls in it is stored in the matching slot in a buffer, and by the time a frame should be simulated the corresponding buffer slot should be filled with input from all players. The input for the current frame is then popped off the buffer, which shifts one frame forward, and the game progresses one step. By maintaining this buffer (barring major interruptions) the connection becomes less sensitive to fluctuations and interruptions, but most importantly the game always has input at hand and can simulate as soon and as quickly as it wants.

But as you might guess, there’s a tradeoff: the added delay. The remote players already have some delay so it doesn’t matter too much, but the local input must be slowed down to match the slowest player, leading to slower response to keyboard input. To minimise this delay, the buffer should be as short as possible. To give everyone’s input time to arrive, the buffer must be at least as long as the slowest player’s ping plus their jitter. To improve the experience, your networking should continually measure how long it is between the last player’s input arriving and the buffer running out, and then adjust the buffer to be as short as possible. A buffer that is too long means unnecessary input delay, but if the buffer is too short and runs out, the game must freeze until input arrives. It’s a fine line to walk, but it’s usually better to lean towards too long than to have interruptions.
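The buffer described above could look something like the following Python sketch. Everything here (the `InputBuffer` class, the fixed `delay_frames`, filling the first frames with a neutral input of 0) is an assumption made to keep the example short. It is not Fish Fight’s implementation, and it leaves out the adaptive buffer length.

```python
class InputBuffer:
    """Stores inputs per frame and releases a frame once every player has reported."""

    def __init__(self, player_ids, delay_frames: int):
        self.player_ids = set(player_ids)
        self.delay_frames = delay_frames
        self.next_frame = 0  # the next frame to simulate
        # Nobody can have sent input for the first few frames, so fill them with neutral input.
        self.slots = {f: {p: 0 for p in self.player_ids} for f in range(delay_frames)}

    def add_local(self, player_id, current_frame: int, player_input: int) -> int:
        """Schedule local input delay_frames ahead and return the frame to stamp on the packet."""
        target = current_frame + self.delay_frames
        self.add_remote(player_id, target, player_input)
        return target

    def add_remote(self, player_id, frame: int, player_input: int) -> None:
        self.slots.setdefault(frame, {})[player_id] = player_input

    def pop_ready(self):
        """Return (frame, inputs) if the next frame is complete, else None (the game must wait)."""
        inputs = self.slots.get(self.next_frame, {})
        if set(inputs) != self.player_ids:
            return None
        self.slots.pop(self.next_frame, None)
        frame = self.next_frame
        self.next_frame += 1
        return frame, inputs


buf = InputBuffer(["local", "remote"], delay_frames=2)
stamped = buf.add_local("local", current_frame=0, player_input=1)  # lands in slot 2
step0 = buf.pop_ready()   # frame 0, neutral input
step1 = buf.pop_ready()   # frame 1, neutral input
stalled = buf.pop_ready() # None: remote input for frame 2 has not arrived
buf.add_remote("remote", 2, 4)
step2 = buf.pop_ready()
print(stamped, stalled, step2)
```

The two frames of delay give the remote input two frames to arrive. When it still isn’t there, `pop_ready` returns `None` and the game has to freeze, which is the “buffer too short” case.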

However, there’s one big, or rather HUGE, issue: Determinism. Since all that is sent between clients is their inputs, one requirement must be met: given the same world state and inputs, the game must generate the exact same next frame, every time, on every computer, OS, architecture, instruction set, and so on. The tiniest deviation accumulates and quickly causes the players' games to desynchronise from each other, making the game unplayable. The main source of nondeterminism is floating point arithmetic. Floating point operations famously produce slightly different results depending on many factors, such as the compiler, its optimisation settings and the CPU’s instruction set. Random number generators must also be kept in sync, by seeding them with the same value and generating random numbers in the same order.
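A small Python sketch of these points. It first shows that floating point addition depends on the order of operations, then runs a toy simulation that sticks to integers (fixed-point) and a seeded generator. The `simulate` function, the `SCALE` constant and the checksum comparison are illustrative assumptions; comparing checksums is one common way to notice a desync, and nothing here says Fish Fight does it this way.

```python
import random
import zlib

# Float addition is not associative, so merely reordering operations changes the result.
print((0.1 + 0.2) + 0.3 == 0.1 + (0.2 + 0.3))  # False

SCALE = 1000  # fixed-point: positions are stored as whole thousandths of a unit


def simulate(seed: int, inputs: list) -> int:
    """Run a toy simulation and return a checksum of the final state."""
    rng = random.Random(seed)  # every peer seeds its generator with the same value
    x = 0                      # fixed-point position, integers only
    for move in inputs:
        x += move * SCALE              # apply the player's input
        x += rng.getrandbits(4) - 8    # random nudge, drawn in the same order on every peer
    return zlib.crc32(x.to_bytes(8, "big", signed=True))


peer_a = simulate(seed=42, inputs=[1, -1, 1, 1])
peer_b = simulate(seed=42, inputs=[1, -1, 1, 1])
print(peer_a == peer_b)  # True
```

Same seed, same inputs, same order of operations: both peers end up with the same checksum. If one peer drew an extra random number or processed the inputs in a different order, the checksums would most likely differ.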

With all the pieces in place, we are finally ready to go through Fish Fight's actual implementation, and see how it's really done!

- - -

This concludes part 1 of our "Netcode Explained" series. In part 2 we will do a code walk-through and piecemeal analysis.

Written by Srayan “ValorZard” Jana and grufkork, with editing by Erlend

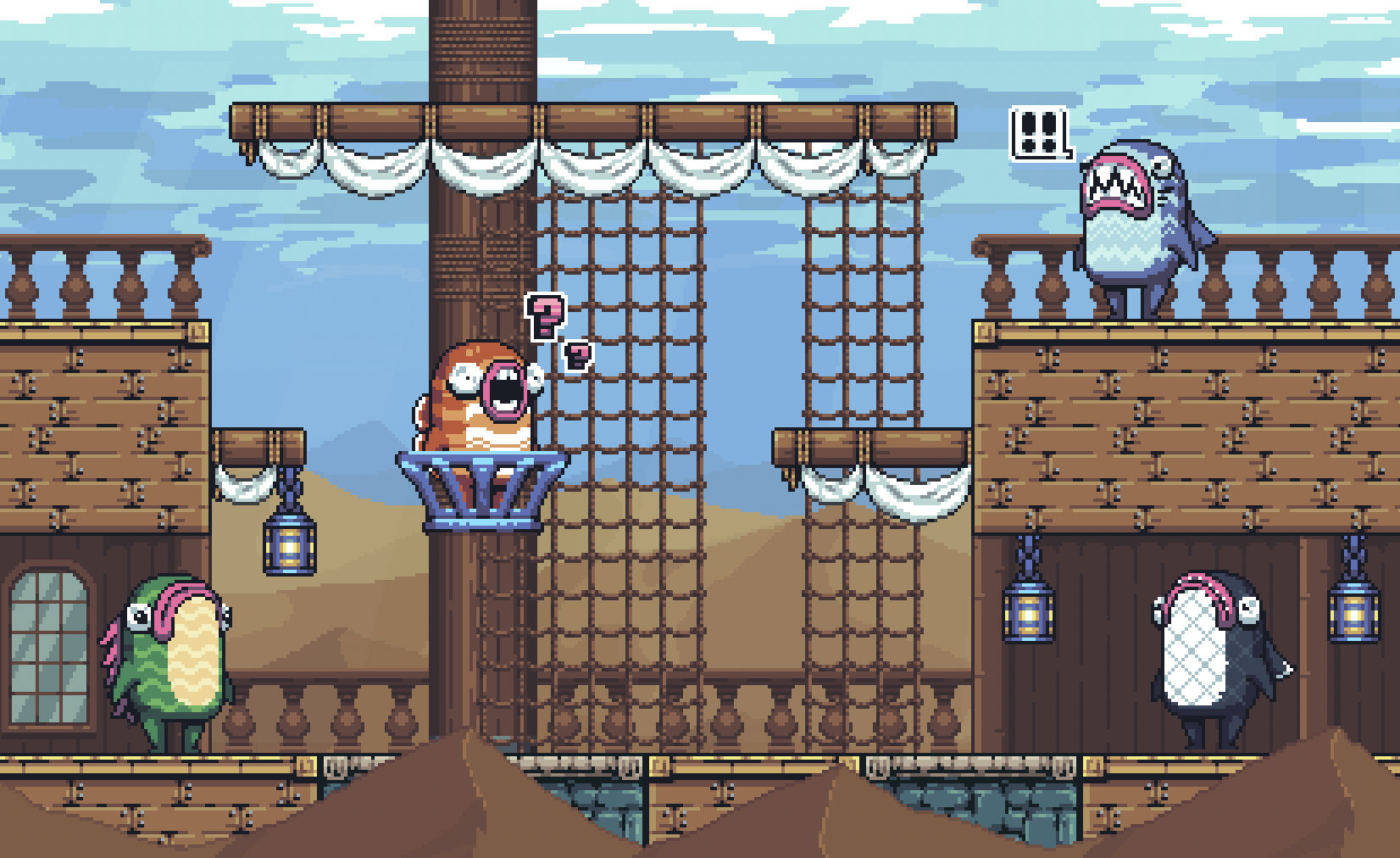

Fish Folk: Jumpy

Tactical 2D jump-'n-shoot for 2-4 players.

| Status | In development |

| Author | Spicy Lobster |

| Genre | Fighting, Action, Platformer |

| Tags | Multiplayer, Open Source, Pixel Art, Tactical |

| Languages | English |

More posts

- Launched on Kickstarter - united against lonelinessMay 26, 2023

- Welcome to the world of Fish FolkAug 03, 2022

- Spicy Lobster (Open Gamedev) CompanyMar 22, 2022

- Open Gamedev SchoolFeb 10, 2022

- Everlasting GamesJan 22, 2022

- Fish Fight's past, present and futureJan 06, 2022

- Open Hiring and $100 internshipsSep 14, 2021

- Fish Fight is open sourceSep 06, 2021

Comments

Log in with itch.io to leave a comment.

The article seems duplicated. Anyway, I am waiting for the next part!